Welcome to Shanshan Blog!

-

Leetcode (500) String Series III

Leetcode String Series III

Leetcode 500. Keyboard Row

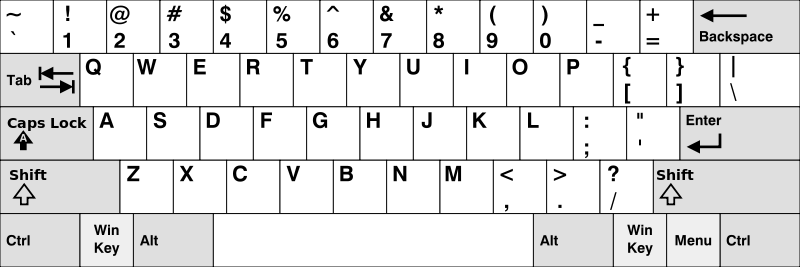

Given a List of words, return the words that can be typed using letters of alphabet on only one row’s of American keyboard like the image below.

Example 1:

Input: ["Hello", "Alaska", "Dad", "Peace"] Output: ["Alaska", "Dad"]class Solution(object): def findWords(self, words): """ :type words: List[str] :rtype: List[str] """ return [word for row in [set('qwertyuiop'), set('asdfghjkl'), set('zxcvbnm')] for word in words if set(word.lower()) <= row]

-

Leetcode (98, 99, 543) Binary Tree Series II

- Leetcode 98. Validate Binary Search Tree

- Leetcode 99. Recover Binary Search Tree

- Leetcode 543. Diameter of Binary Tree

Leetcode Binary Tree Series II

Leetcode 98. Validate Binary Search Tree

Given a binary tree, determine if it is a valid binary search tree (BST).

Assume a BST is defined as follows:

-

The left subtree of a node contains only nodes with keys less than the node’s key.

-

The right subtree of a node contains only nodes with keys greater than the node’s key.

-

Both the left and right subtrees must also be binary search trees.

Example 1:

2 / \ 1 3Binary tree [2,1,3], return true.

Example 2:

1 / \ 2 3Binary tree [1,2,3], return false.

/** * Definition for a binary tree node. * struct TreeNode { * int val; * TreeNode *left; * TreeNode *right; * TreeNode(int x) : val(x), left(NULL), right(NULL) {} * }; */ class Solution { public: bool isValidBST(TreeNode* root) { return helper(root, NULL, NULL); } bool helper(TreeNode* root, TreeNode* minN, TreeNode* maxN) { if (!root) return true; if (minN && root->val <= minN->val || maxN && root->val >= maxN->val) return false; return helper(root->left, minN, root) && helper(root->right, root, maxN); } };Leetcode 99. Recover Binary Search Tree

Two elements of a binary search tree (BST) are swapped by mistake.

Recover the tree without changing its structure.

Note:

A solution using O(n) space is pretty straight forward. Could you devise a constant space solution?

/** * Definition for a binary tree node. * struct TreeNode { * int val; * TreeNode *left; * TreeNode *right; * TreeNode(int x) : val(x), left(NULL), right(NULL) {} * }; */ class Solution { public: TreeNode* one = NULL; TreeNode* two = NULL; TreeNode* pre = new TreeNode(INT_MIN); void recoverTree(TreeNode* root) { help(root); swap(one->val, two->val); } void help(TreeNode* root) { if (!root) return; help(root->left); if (!one && pre->val >= root->val) one = pre; if (one && pre->val >= root->val) two = root; pre = root; help(root->right); } };Leetcode 543. Diameter of Binary Tree

Given a binary tree, you need to compute the length of the diameter of the tree. The diameter of a binary tree is the length of the longest path between any two nodes in a tree. This path may or may not pass through the root.

Example:

Given a binary tree

1 / \ 2 3 / \ 4 5Return 3, which is the length of the path [4,2,1,3] or [5,2,1,3].

通过递归计算左右子树的深度来计算根节点的diameter(记为temp),通过和类变量 diameter 进行比较,保存较大值。在每一次递归结束后,返回左右子树的深度,将二者相加再加2就是根节点的diameter。

/** * Definition for a binary tree node. * struct TreeNode { * int val; * TreeNode *left; * TreeNode *right; * TreeNode(int x) : val(x), left(NULL), right(NULL) {} * }; */ class Solution { public: int res = 0; int getHeight(TreeNode* root) { if (!root) return -1; int leftHeight = getHeight(root->left); int rightHeight = getHeight(root->right); int temp = leftHeight+rightHeight+2; res = max(res, temp); return max(leftHeight, rightHeight)+1; } int diameterOfBinaryTree(TreeNode* root) { getHeight(root); return res; } };

-

Pig Tutorial

- Mode

- load and store data

- shell command, except cd

- Diagnostic

- Group

- Join

- Cross 向量积

- UNION, SPLIT, Distinct

- Filter, Foreach, Order, Limit

- Functions

Mode

# pig local mode pig -x local # pig mapreduce mode pig -x mapreduce pig -x mapreduce Sample_script.pigload and store data

records= load 'hdfs://localhost:9000/input/sample_small.txt' using com.oserp.pigudf.WordCountLoadFunc() as (words:bag{word:(w:chararray)}); A = load '/data/cupid_algo/workspace/shanshan/dt=2017-04-16' using parquet.pig.ParquetLoader(); STORE records INTO ' hdfs://localhost:9000/pig_Output/ ' USING PigStorage (',');shell command, except cd

sh ls; # clear the screen clear;Diagnostic

# show the data DUMP records; # show the schema DESCRIBE records; # show the logical, physical relations EXPLAIN records; # execute step by step ILLUSTRATE records;Group

# groupby Group_data = GROUP records BY age; Group_data = GROUP records BY (age, sex); Group_all = GROUP records BY ALL; # COGROUP two more relations cogroup_data = COGROUP student_details by age, employee_details by age;Join

# inner join Relation3_name = JOIN Relation1_name BY key, Relation2_name BY key ; # left join Relation3_name = JOIN Relation1_name BY id LEFT OUTER, Relation2_name BY customer_id; # right join outer_right = JOIN customers BY id RIGHT, orders BY customer_id; # full join outer_full = JOIN customers BY id FULL OUTER, orders BY customer_id; # multiple key emp = JOIN employee BY (id,jobid), employee_contact BY (id,jobid);Cross 向量积

Relation3_name = CROSS Relation1_name, Relation2_name;UNION, SPLIT, Distinct

student = UNION student1, student2; SPLIT student_details into student_details1 if age<23, student_details2 if (22<age and age>25); # delete the duplicate distinct_data = DISTINCT student_details;Filter, Foreach, Order, Limit

# filter data filter_data = FILTER student_details BY city == 'Chennai'; # for each foreach_data = FOREACH student_details GENERATE id,age,city; # get limited data Result = LIMIT Relation_name required number of tuples; # order order_by_data = ORDER student_details BY age DESC;Functions

Pig-Latin AVG() 函数用于计算包内数值的平均值。在计算平均值时, AVG() 函数会忽略NULL值。

-

要获取全局平均值,我们需要执行Group All操作,并使用 AVG() 函数计算平均值。

-

要获取组的平均值,我们需要使用Group By运算符对其进行分组,然后继续使用average函数。

# eval func student_gpa_avg = foreach student_group_all Generate # get average student_gpa_avg = foreach student_group_all Generate (student_details.firstname, student_details.gpa), AVG(student_details.gpa); # some functions # bagtostring: transfer bag to string # concat: two expressions # MAX, MIN, COUNT, ISEMPTY, SUM, SUBTRACT # TOBAG, TOTUPLE, TOMAP, TOP # other string, numeric, date, time functions ## Execute pig Scriptexec sample_script.pig pig -x mapreduce hdfs://localhost:9000/pig_data/Sample_script.pig ```

-

Spark SQL Tutorial

Overview

Spark SQL is a Spark module for structured data processing.

SQL

One use of Spark SQL is to execute SQL queries. Spark SQL can also be used to read data from an existing Hive installation. When running SQL from within another programming language the results will be returned as a Dataset/DataFrame.

** Datasets and DataFrames A Dataset is a distributed collection of data. The Dataset API is available in Scala and Java.

A DataFrame is a Dataset organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in R/Python, but with richer optimizations under the hood. DataFrames can be constructed from a wide array of sources such as: structured data files, tables in Hive, external databases, or existing RDDs. The DataFrame API is available in Scala, Java, Python, and R. In Scala and Java, a DataFrame is represented by a Dataset of Rows. In the Scala API, DataFrame is simply a type alias of Dataset[Row]. While, in Java API, users need to use Dataset

to represent a DataFrame.

Get Start

Starting Point: SparkSession

import org.apache.spark.sql.SparkSession val spark = SparkSession .builder() .appName("Spark SQL basic example") .config("spark.some.config.option", "some-value") .getOrCreate() // For implicit conversions like converting RDDs to DataFrames import spark.implicits._Create Dataframe

``scala val df = spark.read.json(“examples/src/main/resources/people.json”)

// Displays the content of the DataFrame to stdout df.show()

**Dataframe Operations** ```scala import spark.implicits._ df.printSchema() //select "name" column df.select("name").show() df.select($"name", $"age" + 1).show() //filter df.filter($"age" > 21).show() //groupby count df.groupBy("age").count().show()For more operations, you can see the Dataset AIP

Running SQL Queries

// Register the DataFrame as a SQL temporary view df.createOrReplaceTempView("people") val sqlDF = spark.sql("SELECT * FROM people") sqlDF.show()Create Datasets

Datasets are similar to RDDs, however, instead of using Java serialization or Kryo they use a specialized Encoder to serialize the objects for processing or transmitting over the network. While both encoders and standard serialization are responsible for turning an object into bytes, encoders are code generated dynamically and use a format that allows Spark to perform many operations like filtering, sorting and hashing without deserializing the bytes back into an object.

case class Person(name: String, age: Long) // Encoders are created for case classes val caseClassDS = Seq(Person("Andy", 32)).toDS() caseClassDS.show() // Encoders for most common types are automatically provided by importing spark.implicits._ val primitiveDS = Seq(1, 2, 3).toDS() primitiveDS.map(_ + 1).collect() // Returns: Array(2, 3, 4) // DataFrames can be converted to a Dataset by providing a class. Mapping will be done by name val path = "examples/src/main/resources/people.json" val peopleDS = spark.read.json(path).as[Person] peopleDS.show()Interoperating with RDDs

Spark SQL supports two different methods for converting existing RDDs into Datasets. The first method uses reflection to infer the schema of an RDD that contains specific types of objects. This reflection based approach leads to more concise code and works well when you already know the schema while writing your Spark application.

The second method for creating Datasets is through a programmatic interface that allows you to construct a schema and then apply it to an existing RDD. While this method is more verbose, it allows you to construct Datasets when the columns and their types are not known until runtime.

- Inferring the Schema Using Reflection

The Scala interface for Spark SQL supports automatically converting an RDD containing case classes to a DataFrame. The case class defines the schema of the table.

import org.apache.spark.sql.catalyst.encoders.ExpressionEncoder import org.apache.spark.sql.Encoder // For implicit conversions from RDDs to DataFrames import spark.implicits._ // Create an RDD of Person objects from a text file, convert it to a Dataframe val peopleDF = spark.sparkContext .textFile("examples/src/main/resources/people.txt") .map(_.split(",")) .map(attributes => Person(attributes(0), attributes(1).trim.toInt)) .toDF() // Register the DataFrame as a temporary view peopleDF.createOrReplaceTempView("people") // SQL statements can be run by using the sql methods provided by Spark val teenagersDF = spark.sql("SELECT name, age FROM people WHERE age BETWEEN 13 AND 19") // The columns of a row in the result can be accessed by field index teenagersDF.map(teenager => "Name: " + teenager(0)).show() // or by field name teenagersDF.map(teenager => "Name: " + teenager.getAs[String]("name")).show() // row.getValuesMap[T] retrieves multiple columns at once into a Map[String, T] teenagersDF.map(teenager => teenager.getValuesMap[Any](List("name", "age"))).collect()- Programmatically Specifying the Schema

When case classes cannot be defined ahead of time (for example, the structure of records is encoded in a string, or a text dataset will be parsed and fields will be projected differently for different users), a DataFrame can be created programmatically with three steps.

-

Create an RDD of Rows from the original RDD;

-

Create the schema represented by a StructType matching the structure of Rows in the RDD created in Step 1.

-

Apply the schema to the RDD of Rows via createDataFrame method provided by SparkSession.

import org.apache.spark.sql.types._ // Create an RDD val peopleRDD = spark.sparkContext.textFile("examples/src/main/resources/people.txt") // The schema is encoded in a string val schemaString = "name age" // Generate the schema based on the string of schema val fields = schemaString.split(" ") .map(fieldName => StructField(fieldName, StringType, nullable = true)) val schema = StructType(fields) // Convert records of the RDD (people) to Rows val rowRDD = peopleRDD .map(_.split(",")) .map(attributes => Row(attributes(0), attributes(1).trim)) // Apply the schema to the RDD val peopleDF = spark.createDataFrame(rowRDD, schema) // Creates a temporary view using the DataFrame peopleDF.createOrReplaceTempView("people") // SQL can be run over a temporary view created using DataFrames val results = spark.sql("SELECT name FROM people") // The results of SQL queries are DataFrames and support all the normal RDD operations // The columns of a row in the result can be accessed by field index or by field name results.map(attributes => "Name: " + attributes(0)).show()Data Sources

- Generic Load/Save Functions

val usersDF = spark.read.load("examples/src/main/resources/users.parquet") usersDF.select("name", "favorite_color").write.save("namesAndFavColors.parquet")- Manually Specifying Options

val peopleDF = spark.read.format("json").load("examples/src/main/resources/people.json") peopleDF.select("name", "age").write.format("parquet").save("namesAndAges.parquet")- Run SQL

val sqlDF = spark.sql("SELECT * FROM parquet.`examples/src/main/resources/users.parquet`")Parquet Files

import spark.implicits._ val peopleDF = spark.read.json("examples/src/main/resources/people.json") // DataFrames can be saved as Parquet files, maintaining the schema information peopleDF.write.parquet("people.parquet") // Read in the parquet file created above val parquetFileDF = spark.read.parquet("people.parquet") // Parquet files can also be used to create a temporary view and then used in SQL statements parquetFileDF.createOrReplaceTempView("parquetFile") val namesDF = spark.sql("SELECT name FROM parquetFile WHERE age BETWEEN 13 AND 19") namesDF.map(attributes => "Name: " + attributes(0)).show()Hive Table

import org.apache.spark.sql.Row import org.apache.spark.sql.SparkSession case class Record(key: Int, value: String) // warehouseLocation points to the default location for managed databases and tables val warehouseLocation = "spark-warehouse" val spark = SparkSession .builder() .appName("Spark Hive Example") .config("spark.sql.warehouse.dir", warehouseLocation) .enableHiveSupport() .getOrCreate() import spark.implicits._ import spark.sql sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING)") sql("LOAD DATA LOCAL INPATH 'examples/src/main/resources/kv1.txt' INTO TABLE src") // Queries are expressed in HiveQL sql("SELECT * FROM src").show() // The results of SQL queries are themselves DataFrames and support all normal functions. val sqlDF = sql("SELECT key, value FROM src WHERE key < 10 ORDER BY key") // The items in DaraFrames are of type Row, which allows you to access each column by ordinal. val stringsDS = sqlDF.map { case Row(key: Int, value: String) => s"Key: $key, Value: $value" } stringsDS.show() // You can also use DataFrames to create temporary views within a SparkSession. val recordsDF = spark.createDataFrame((1 to 100).map(i => Record(i, s"val_$i"))) recordsDF.createOrReplaceTempView("records") // Queries can then join DataFrame data with data stored in Hive. sql("SELECT * FROM records r JOIN src s ON r.key = s.key").show()

-

计算广告学 笔记4.3 流量预测和ZooKeeper

课程地址:计算广告学

课时22 流量预测

在前面合约式广告中提到过合约式广告中有三个基本技术,1. 受众定向,2. 点击预测,3. 流量预测。在这三个技术基础上才可以做Online Allocation,本节主要介绍流量预测。

在定向条件分的比较粗的情况下,流量预测是比较简单的,比如只对人群分了几十个类,那么只需要进行简单的统计就可以进行流量预测了。

这里只介绍一种简单的,但很有启发意义的方法。这种方法是将查询视为a,文档集是(u,c)的一个反向检索的过程。与之相对的是在广告检索的过程中,查询是(u,c),文档集是a。这种方法对u或c进行检索,所检索出的数量也就是流量的大小。之所以要这样做的原因是,广告的定向条件的可能组合非常多,不可能通过简单的统计来完成。

因为(u,c)的联合空间规模过大,无法直接对联合空间进行处理,也没有必要这样。所以需要对u和c分别处理。即分别对受众和页面建索引,用广告进行索引,可以得到满足定向的受众和页面量分别是多少。

课时23 ZooKeeper 介绍

举一个使用场景,比如我们有很多台Index,或者有很多台Ad Server,其中有一些机器崩溃了,或是有一些机器新添加进了集群,如何用一个分布式的管理系统通知其它相关模块,哪些机器是可以用的。ZooKeeper就是解这种问题的,ZooKeeper是在基于消息传递通信模型的分布式环境下解决一致性问题的基础服务。

Paxos算法

Paxos 算法解决的问题是一个分布式系统如何就某个值(决议)达成一致。一个典型的场景是,在一个分布式数据库系统中,如果各节点的初始状态一致,每个节点执行相同的操作序列,那么他们最后能得到一个一致的状态。为保证每个节点执行相同的命令序列,需要在每一条指令上执行一个“一致性算法”以保证每个节点看到的指令一致。一个通用的一致性算法可以应用在许多场景中,是分布式计算中的重要问题。因此从20世纪80年代起对于一致性算法的研究就没有停止过。节点通信存在两种模型:共享内存(Shared memory)和消息传递(Messages passing)。Paxos 算法就是一种基于消息传递模型的一致性算法。

-

计算广告学 笔记4.2 广告检索

课程地址:计算广告学

课时21 广告检索

广告检索谈论两点:

1.布尔表达式检索,2. 长Query,它在上下文广告时会用到。

广告投放条件过滤可以视为布尔表达式检索问题。它不同于web搜索中关键词的搜索。比如广告主投放条件可能是年龄属于第三年龄段,地区是纽约,或是地区是加州,性别是男性的用户。可以用(age∈{3}^state∈{NY})∨(state∈{CA}^gender∈{M})表示。广告定向条件的DNF是一组或的条件,即某种定向条件或是某种定向条件下,广告可以展示。DNF可以分解成Conjunction, Conjunction是按或的方式分开,并且同一定向条件在某Conjunction里只出现一次,比如上面的例子就可以分解age∈{3}^state∈{NY}和state∈{CA}^gender∈{M},这里state在两个Conjunction中就各出现了一次。Assignment是一组属性名称和值的集合{A1=v1, A2=v2, …},比如一个在加州的女性用户可能就有{gender=F, state=CA}的assignment,sizeof[Conjuction]是conjunction中∈的个数。这个是比较重要的一个定义,实际意义是一个广告的其中的定向条件个数。在上例的age∈{3}^state∈{NY}中,那么如果你的查询只有一个条件时,那么这个条件不可能满足这个定向条件,那么在检索的过程中可以借助这种方式进行一定的加速。Yahoo!的GD广告就是用的这种检索方式。

Index Boolean Expression论文中的基本思想是某查询满足conjunction,也就满足包含此conjunction的doc。索引维护两层倒排关系,Conjunction->DocId和Assignment->ConjunctionId,Conjunction->DocId是普通的倒排索引,Assigment->ConjunctionId可会考虑sizeof(Conjunction),如果sizeof(Conjunction)大于sizeof(query),则无需考虑。

Index算法

- 建立第一层 Index

遍历文档DNF的Conjunction,如果为新的,则分配一个新ID(从0递增),否则用之前分配的ConjunctionID;文档分配DocID(从0递增);写入conjunction到doc的倒排关系,形成第一层Index。

- 对于上步出现的新Conjunction,建立第二层 Index

** 将Conjunction切成Assignment流,Term为(属性, 值),例: age ∈ {3;4}切成两个Term: (age, 3), (age, 4);state ? {CA;NY}也切成两个Term: (state, CA), (state, NY),?和∈体现在倒排链表上

** 计算Conjunction的size,将size体现在Term中,最终的Term的组成是(sizeof[Conjunction], 属性, 值)

** 对于size为0的Conjunction,添加一个特殊的Term:Z,∈

** 写入倒排关系, Term -> (ConjunctionID, ∈ ?)+ 实例

下面是描述成DNF形式的广告集合。

doc1 = (age∈{3}^state∈{NY})∨(state∈{CA}^gender∈{M})=c1∨c4 doc2 = (age∈{3}^state∈{F})∨(state?{CA;NY})=c2∨c6 doc3 = (age∈{3}^gender∈{M}^state?{CA})∨(state∈{CA}^gender∈{F})=c3∨c7 doc4 = (age∈{3;4})∨(state∈{CA}^gender∈{M})=c5∨c4 doc5 = (state?{CA;NY})∨(age∈{3;4})=c6∨c5 doc7 = (age∈{3}^state∈{NY})∨(state∈{CA}^gender∈{F})=c1∨c7 doc6 = (state?{CA;NY})∨(age∈{3}^state∈{NY})∨(state∈{CA}^gender∈{M})= c6∨c1∨c4第一层倒排(conjunction->DocId)

c1 -> doc-1, doc-6, doc-7 c2 -> doc-2 c3 -> doc-3 c4 -> doc-1, doc-4, doc-7 c5 -> doc-4, doc-5 c6 -> doc-2, doc-5, doc-7 c7 -> doc-3, doc-6比如c1这个conjuction在doc-1,doc-6,doc-7中都有,所以c1的posting list就是这样的,这个倒排是为了得到广告的目的,比较简单。

第二层倒排(assignment->conjunction),它首先按size进行划分,0表示size=0的conjunction组成的倒排表,它里面只有不属于的情况,1表示conjunction只有一个属于的情况。以此类推。

在检索的时候,先查看sizeof(query),即查询中有几个定向条件,仅当查询的定向条件少于广告的定向条件时,才会查找广告,否则不用查找,这样可以减少查找的工作量。

长Query

做上下文广告时会用到,从要投放的页面中提取标签,到广告集合中匹配相应的广告,标签可能有几十到上百个,但这么多的标签对搜索引擎检索是很大的挑战。但所有的关键词都是Should的关系。它们即不是AND的关系,如果是AND的关系,可能根本就检索不到广告,也不是OR的关系,OR的关系会检索出大量的文档,对后面做相关性造成很大的压力。

传统搜索引擎中需需要将所有出现过以上Term的文档都取出来,然后计算相关性并找到Top-N,在长Query和大文档集时查询速度被巨大的计算量所限制,并且最终的效果也不会很好,Top-N中N的取值如果太大会给后续计算产生很大的压力,如果N太小可能会将一些优质的广告丢弃了。

一种可选的思路是:在查找候选DOC的过程中做一个近似的评估,切掉那些理论上不需要再考虑的文档,只对进候选的文档进行相关性计算,比Top-N最小堆最小值大的插入。理论上不需要再考虑的文档是由一个相关性函数来决定,相关性函数会判断一个query和一个文档的相关程度,当相当性函数为线性时,存在有效的剪枝算法。对于相关性函数是线性这个问题,大家不要太苛求,很显然在后续计算相关性时,不一定用线性函数,比如最常用的cosine distance也不是线性函数,但如果你使用相对合理的线性评价函数,使得retrieval的过程变得很有效,那么对ranking阶段的速度是很有帮助的。所以这种思路的核心是在线性函数的限制下找到一个相对合理的相关性函数。

WAND 算法

教程里没有详细说明,随后附上详细的讲解。